Digital assessment offers route to developing computational thinking outside computing classrooms

Researchers have trialled a new approach to testing students’ ‘computational thinking’ in terms which go far beyond the programming skills learned in computing lessons.

Computational thinking is a relatively new goal of many education systems around the world and features particularly prominently in computing education. The national curriculum in England, for example, requires students to be able to “analyse problems in computational terms” and have “repeated practical experience of writing computer programs in order to solve such problems”.

Outside the school system, however, computational thinking tends to refer to something more complex: a richer set of cognitive skills, linked to problem-solving, which may or may not involve computer programming.

The new University of Cambridge study tested an assessment called the ‘Computational Thinking Challenge’ (CTC) which, similarly, attempts to measure computational thinking as a general capacity – and not just programming ability.

The analysis, which trialled the CTC with more than 1,100 secondary pupils in the UK, showed that it is possible to assess students’ computational thinking as a broader ‘competency’, suggesting it can be cultivated across the curriculum, beyond computing lessons alone.

Dr Rina Lai from the Faculty of Education, University of Cambridge, who led the research and development of the CTC during her doctoral research, said: “A lot of people assume that computational thinking refers to computing skills, but that’s a very narrow definition. There is a good case for seeing it as a more complex 21st-century demand: a general competency that enables people to solve real-world problems. Education should encourage computational thinking in this wider sense.”

Michelle Ellefson, Professor of Cognitive Science at the Faculty, and a co-author, said: “The assessment we tested here can be used by teachers to assess their students’ underlying computational thinking skills in all sorts of subjects. It’s about far more than whether they can write programs in Python or C.”

Beyond education, computational thinking is often understood in these terms. In fields such as computational biology, neuroscience and linguistics, it is referred to as a set of cognitive skills and abilities which, for example, include breaking a problem down into its constituent parts, identifying comparable challenges, and sorting through large quantities of information.

“This broader conceptualisation is often neglected in formal learning because it’s difficult to understand and measure,” Lai said. “The upshot is that there’s a disjuncture between what we want at a policy level, or in workplaces, and what’s happening in schools.”

The CTC aims to address this by providing teachers with a tool for measuring students’ overall computational thinking competency. Lai hopes to continue developing it and make it freely available online for teachers.

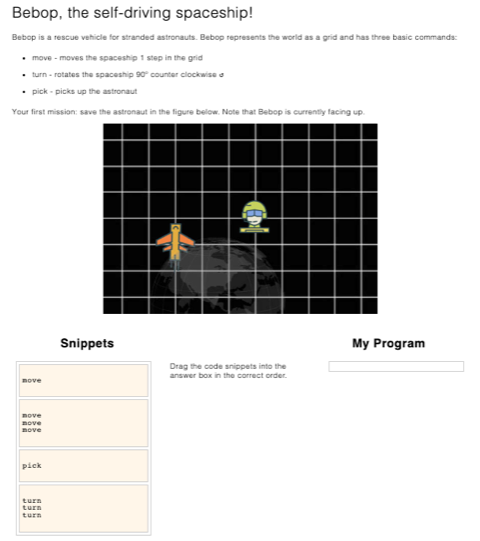

The CTC is a computerised assessment consisting of 14 questions that encapsulate problem-solving and programming elements. Crucially, these are presented in normal language that can be understood by non-coders. For example, in one question, students have to choose a set of instructions that will help a spaceship navigate across a map. These are written in plain English rather than in a programming language. A typical instruction reads: "If front is blocked: turn. Otherwise: move."
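To illustrate how a plain-English rule like this corresponds to a program, here is a minimal Python sketch. The grid, the starting position, the assumption that "turn" means a 90-degree clockwise turn, and the helper `run_rule` are all hypothetical; none of them is taken from the CTC itself.

```python
# Hypothetical map: '#' is a wall, '.' is open space, 'A' is the astronaut.
GRID = [
    "#####",
    "#..A#",
    "#.###",
    "#.###",
    "#####",
]

# Directions in clockwise order: north, east, south, west (row, col deltas).
DIRECTIONS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def run_rule(grid, start, facing, max_steps=50):
    """Apply 'If front is blocked: turn. Otherwise: move.' repeatedly.

    Stops when the astronaut 'A' is reached or after max_steps iterations.
    'turn' is assumed (hypothetically) to be a 90-degree clockwise turn.
    Returns the list of grid positions visited, starting with `start`.
    """
    row, col = start
    path = [start]
    for _ in range(max_steps):
        if grid[row][col] == "A":
            break  # reached the astronaut
        dr, dc = DIRECTIONS[facing]
        if grid[row + dr][col + dc] == "#":  # front is blocked: turn
            facing = (facing + 1) % 4
        else:  # otherwise: move one square forward
            row, col = row + dr, col + dc
            path.append((row, col))
    return path


# Start in the bottom of the corridor, facing north (index 0).
print(run_rule(GRID, start=(3, 1), facing=0))
# [(3, 1), (2, 1), (1, 1), (1, 2), (1, 3)]
```

A single rule like this only succeeds on suitable maps; on others it can circle indefinitely, which is why the sketch caps the number of steps.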

Upon completion, students receive personalised feedback regarding their performance, which encourages them to apply computational thinking in other contexts.

In their analysis, Lai and Ellefson examined the extent to which this test measures computational thinking as a multidimensional construct, beyond students’ programming skills.

A sample group of 1,130 secondary school pupils took the test and the researchers conducted several in-depth analyses of the resulting data. First, they compared how well the data fitted two different models: one represented computational thinking as a unidimensional construct, and the other represented it as a multidimensional construct consisting of both programming and non-programming skills.

The data clearly fitted the multidimensional model more closely, and appeared to provide a picture of computational thinking as an overall competency, as intended. "We needed to know this because, while you can design a test to be multidimensional, having the evidence it works at this level is another matter," Lai said.

The researchers then investigated how the test was working in psychometric terms, and whether, as well as providing an aggregate score for students' computational thinking competency, it also provided a more detailed picture of their programming and non-programming (problem-solving) skills.

This has implications for how computational thinking assessments are designed. Traditionally, these tests tend to use a single type of question, whereas the CTC combines different question formats and types in an effort to tap into both programming and non-programming skills. The analysis showed that the score the CTC generates reliably reflects the complexity of computational thinking, incorporating measures of these different elements.

Although the CTC is still in development, the study shows it is possible for teachers to measure and develop the broad computational thinking skills of students, beyond the narrower construct that is typically applied in educational settings.

“It tells us something more about students’ computational thinking skills than a curriculum-aligned assessment could,” Lai said. “Because it doesn’t tap into what students are learning in computing education alone, it opens the door to assessing and growing this crucial competency in other contexts and subject areas as well.”

The research is published in the Journal of Educational Computing Research.

Images in this story:

Teacher helping students working at computers in a classroom. (Credit: City of Seattle Community Tech Photostream. Licensed under CC BY-NC 2.0.)

Example exercise from the CTC in which students use natural-language instructions to work out how to program a spaceship to navigate to an astronaut. (Image courtesy of Rina Lai)