Science journals update guidelines after study highlights incomplete reporting of research

Several scientific journals have amended their submission guidelines after an analysis identified numerous research studies that had been published with crucial information missing.

The finding emerged from an analysis by academics at the University of Cambridge, which reviewed reports from trials evaluating new school-based programmes designed to increase children's physical activity. It found that 98% of these reports left out key details about how teachers had been trained to deliver the interventions.

The trial reports were published in 33 academic journals between 2015 and 2020 and collectively covered evaluations involving tens of thousands of pupils in hundreds of schools internationally.

The reports omitted key details such as where teachers had been trained to deliver the interventions, how that training had been provided, and whether it was adapted to meet the teachers' needs and skills. Since each of these details is thought to influence the success of a trial, the new analysis argues that the published reports are of limited scientific use.

Incomplete reporting also presents a challenge to anyone interested in implementing these interventions in other settings. Policy actors, practitioners and other researchers wishing to do so depend on studies being fully reported. The research team contacted 32 academic journals about their findings. Seven have since altered their submission guidance to encourage better reporting; the remaining 25 have yet to do so.


The initiative was led by Mairead Ryan, a doctoral researcher at the Faculty of Education and the Medical Research Council Epidemiology Unit, University of Cambridge. It was supported by Professor Tammy Hoffmann (Bond University, Australia), Professor Riikka Hofmann and Dr Esther van Sluijs (both University of Cambridge). Ryan and colleagues have published a commentary about the initiative, and the need for action from editors, in the journal Trials.

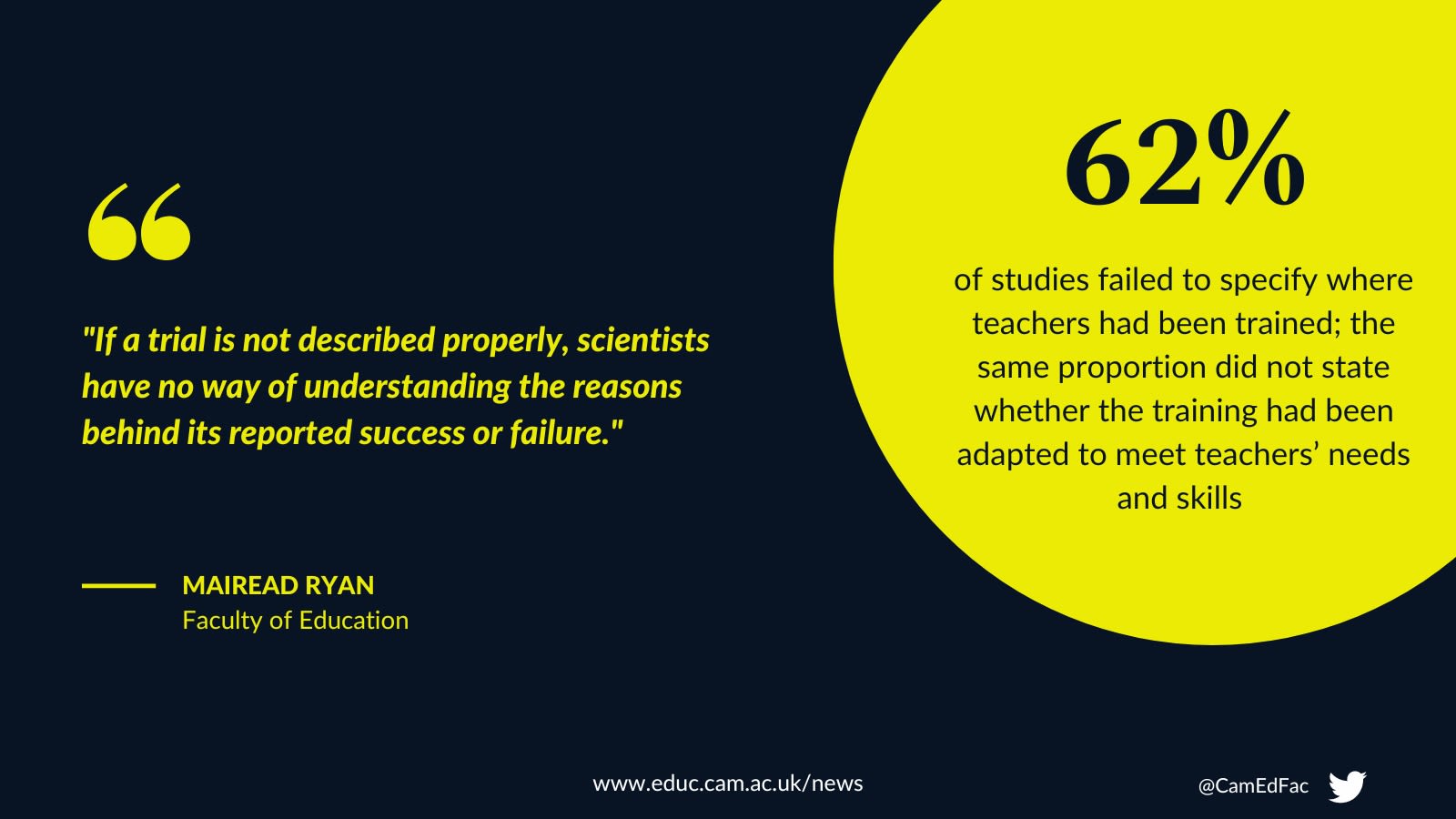

“Inadequate intervention reporting is a widespread problem,” Ryan said. “If a trial is not described properly, scientists have no way of understanding the reasons behind its reported success or failure. This is preventing meaningful progress for researchers and practitioners.”

Free checklists are available to help researchers ensure full reporting. "Unfortunately, most of the journals we looked at did not encourage the use of reporting checklists for all intervention components in their submission guidelines," van Sluijs said. "We would urge journals from all academic fields to review their guidance, to ensure a scientific evidence base that is fit for purpose."

The researchers examined whether reports from 51 trials of physical activity interventions for children and adolescents had recorded the information stipulated in one such checklist: the Template for Intervention Description and Replication (or TIDieR). TIDieR was introduced in 2014, specifically for intervention research. It provides a minimum list of recordable items required to ensure that findings can be used and replicated.

Alongside the main reports, the team analysed related documents – such as protocols, process evaluations, and study websites – to see if these captured information specified in the TIDieR checklist. They analysed 183 documents in total.

Despite this thorough search, they found significant gaps in how the interventions were reported in 98% of cases.

Very basic information was often missing. For example, 62% of studies failed to specify where the teachers had been trained; the same proportion did not state whether the training had been adapted to meet teachers’ needs and skills, and 60% did not mention whether teachers were trained in a group or individually. As evidence suggests that each of these points can influence how successfully an intervention is delivered, their inclusion in a research report is essential.


When the research team assessed the submission guidelines of the academic journals that had published these reports, they found that just one encouraged the use of reporting checklists for all intervention components.

They then contacted each journal’s Editor-in-Chief (or equivalent) and asked them to update their guidelines. To date, 27 have responded. Seven have updated their guidelines; others are still in discussion with their editorial teams and publishers.

One of the journals that acted in response is the British Journal of Sports Medicine. The journal’s Editor-in-Chief, Professor Jonathan Drezner, said: “Required reporting guidelines for intervention trials ensure that interventions are fully described and replicable. I am grateful to the researchers who advocated for a more rigorous and consistent reporting standard that ultimately raises the quality and scientific value of the clinical trials we publish.”

The authors hope more journals will follow suit. They point out that the 2013 Declaration of Helsinki, which lays out ethical principles for medical research involving human subjects, identifies full reporting as an “ethical obligation” for researchers, authors, editors and publishers.

Ryan believes that journal guidelines can play a major role in changing the status quo. “We know that the quality of reporting is better in journals that endorse these checklists,” she said. “Researchers should be encouraged to use them when submitting their work.”

Image in this story: ds_30, Pixabay.